“This new law allows for testing of autonomous vehicles, or self-driving cars, by designees of an autonomous technology manufacturer, provided certain requirements are met. Prior to the public operating autonomous vehicles, a manufacturer is required to submit an application to DMV, and certify that specified insurance, vehicle safety, and testing standards have been met. The legislation authorizes regulations to specify any additional requirements deemed necessary by DMV, such as limitations on the number of autonomous vehicles deployed on the state's highways, special vehicle registration and driver licensing requirements, and rules for the suspension, revocation, or denial of any license or approval issued by DMV pertaining to autonomous vehicles. (SB 1298/Padilla)”

Why do I blog this? While in California for the New Year’s break, we had to review the new driving-related laws at the rental car office. This paragraph was quite interesting, although our rental car wasn’t autonomous.

“Rubber band AI”: “Rubber band AI refers to an artificial intelligence found in titles such as racing or sports titles that is designed to prevent players from getting too far ahead of computer-controlled opponents. When done well, such AIs can ...
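The teaser above describes the mechanism in a single sentence. As a rough illustration, here is a minimal Python sketch, assuming a simple linear "catch-up" rule: each opponent's speed is scaled according to how far the player is ahead of it, and the adjustment is clamped. The function name and the `strength` and `max_boost` parameters are hypothetical and not taken from any particular game.

```python
def rubber_band_speed(base_speed: float, gap: float,
                      strength: float = 0.02, max_boost: float = 0.25) -> float:
    """Hypothetical rubber-band rule for a computer-controlled racer.

    gap > 0: the player is ahead of this opponent (in track units), so the
    opponent speeds up; gap < 0: the opponent leads, so it slows down.
    """
    # Linear adjustment proportional to the gap (an assumed rule),
    # clamped to +/- max_boost so the catch-up effect stays bounded.
    adjustment = max(-max_boost, min(max_boost, strength * gap))
    return base_speed * (1.0 + adjustment)


if __name__ == "__main__":
    for gap in (-100.0, 0.0, 5.0, 100.0):
        print(gap, rubber_band_speed(100.0, gap))
```

The clamp is one way to keep the effect subtle; an unbounded boost would likely make the adjustment obvious to players.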

“What? They’re Only Mechanical Men!” says Spock, in this old Star Trek coloring book shown on io9. Why do I blog this? This kind of behavior toward mechanical creatures will definitely become more common, even if the robots won’t be humanoid (see this s...

Weird: Disney Research in Zurich, Switzerland, has just developed a new robot-making process that enables a physical human face to be cloned onto a robot. The process is called “Physical Face Cloning,” and it involves scanning a human head. ...

“Hard Time on Planet Earth” is an American science fiction series that aired on CBS in 1989 and caught my attention at the time because of the weird floating robot that constantly moved around the main character. According to Wikipedia: Afte...

Despite its flawed title, reading My Life as a Telecommuting Robot was intriguing enough. Some excerpts: During my robot days, I interacted with co-workers I’d never met before, as well as others I hadn’t talked with in years; each of them was com...

Cat + robot

Robot Mori: a curious assemblage from the Uncanny Valley

Perhaps the weirdest piece of technology I've seen recently is this curious assemblage exhibited at Lift in Seoul: it's called "Robot Mori" and, as described by Advanced Technology Korea:

"Meet Mori, the alter ego of a lonely boy who wants to go out and make friends but is too shy. Mori, on the other hand, isn’t shy at all. He swivels his head, looking around for nearby faces. Once he detects your face, he takes a picture and uploads it to his Flickr page."

Why do I blog this? The focus on the face, and the visual aesthetic produced by the whole device, are strikingly intriguing. It is definitely close to the Uncanny Valley... which made me realize that whatever sits in the valley often belongs to the New Aesthetic trope. I personally find it fascinating that robots can have this kind of visual appearance, and I wonder whether some people might get used to it after a while... in the same way that they got used to round robotic vacuum cleaners moving across the floor.

Bot activity on Wikipedia entries about Global Warming

Looking for material for an upcoming speech, I ran across this research project (by the Digital Methods Initiative) that inquires into how contributors shape the composition of issues on Wikipedia, and the consequences for the possibility of carrying out public debate and controversy on articles about Global Warming.

The bit that intrigued me is exemplified by the following diagrams... which look into the role of bots in article interventions and the link with controversies:

There is no crazy takeover by bots, but I would find it intriguing to observe how this evolves.

Why do I blog this? This is a fascinating topic to observe. I see it as an indicator of something that may have more and more implications, especially for the production of cultural content. One can read more about this in Stuart Geiger's article "The Lives of Bots":

"Simple statistics indicate the growing influence of bots on the editorial process: in terms of the raw number of edits to Wikipedia, bots are 22 of the top 30 most prolific editors and collectively make about 16% of all edits to the English-language version each month.

While bots were originally built to perform repetitive tasks that editors were already doing, they are growing increasingly sophisticated and have moved into administrative spaces. Bots now police not only the encyclopedic nature of content contributed to articles, but also the sociality of users who participate in the community"

How socialbots could influence changes in the social graph

Socialbots: voices from the fronts, in the latest issue of ACM interactions, is an interesting multi-author piece about how socialbots (programs that operate autonomously on social networking sites) recombine relationships within those sites, and how their use may influence relationships among people. The different stories highlighted here show how "digitization drives botification" and how, when socialbots become sufficiently sophisticated, numerous, and embedded within the human systems in which they operate, these automated scripts can significantly shape those human systems. The most intriguing piece is about a competition exploring how socialbots could influence changes in the social graph of a Twitter subnetwork. Each team of participants was tasked with building software robots that would ingratiate themselves into a target network of 500 Twitter users by following and trying to prompt responses from those users. Some excerpts about the strategies employed:

"On tweak day we branched out in some new directions:

- Every so often James would send a random question to one of the 500 target users, explicitly ask for a follow from those that didn’t already follow back, or ask a nonfollowing user if James had done something to upset the target.

- Every time a target @replied to James, the bot would reply to them with a random, generic response, such as “right on baby!”, “lolariffic,” “sweet as,” or “hahahahah are you kidding me?” Any subsequent reply from a target would generate further random replies from the bot. James never immediately replied to any message, figuring that a delay of a couple of hours would help further explain the inevitable slight oddness of James’s replies. Some of the conversations reached a half-dozen exchanges.

- James sent “Follow Friday” (#FF) messages to all of his followers but also sent messages to all of his followers with our invented #WTF “Wednesday to Follow” hash tag on Wednesday. James tweeted these shoutouts on Wednesday/Friday New Zealand time so that it was still Tuesday/Thursday in America. The date differences generated a few questions about the date (and more points for our team)."
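The reply strategy described in the excerpt (a random canned answer, never sent immediately) is simple enough to sketch. The Python below only schedules replies in memory and does not call any Twitter API; the `schedule_reply` function, the `ScheduledReply` class, and the 1.5 to 3 hour delay window are hypothetical assumptions standing in for "a couple of hours".

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta

# Canned responses quoted in the excerpt above.
GENERIC_REPLIES = [
    "right on baby!",
    "lolariffic",
    "sweet as",
    "hahahahah are you kidding me?",
]


@dataclass
class ScheduledReply:
    user: str
    text: str
    send_at: datetime


def schedule_reply(user: str, received_at: datetime, rng: random.Random,
                   min_delay_h: float = 1.5,
                   max_delay_h: float = 3.0) -> ScheduledReply:
    """Hypothetical helper: pick a random generic reply to an @reply and
    delay it by a random interval (assumed range) instead of answering at once."""
    text = f"@{user} {rng.choice(GENERIC_REPLIES)}"
    delay = timedelta(hours=rng.uniform(min_delay_h, max_delay_h))
    return ScheduledReply(user=user, text=text, send_at=received_at + delay)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so runs are reproducible
    reply = schedule_reply("target_user", datetime(2011, 1, 5, 9, 0), rng)
    print(reply.send_at, reply.text)
```

Each further reply from a target would simply trigger another call, which is how conversations could stretch over several exchanges; the delay is the team's own rationale for masking the oddness of the answers.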

Why do I blog this? Because this kind of experiment can lead to informative insights about socialbot behavior and its cultural implications. The paper says little about it, but it would be good to know more about the results, people's reactions, etc. This discussion about software behavior is definitely an important topic to address when it comes to robots, much more so than discussions about zoomorphic or humanoid shapes.

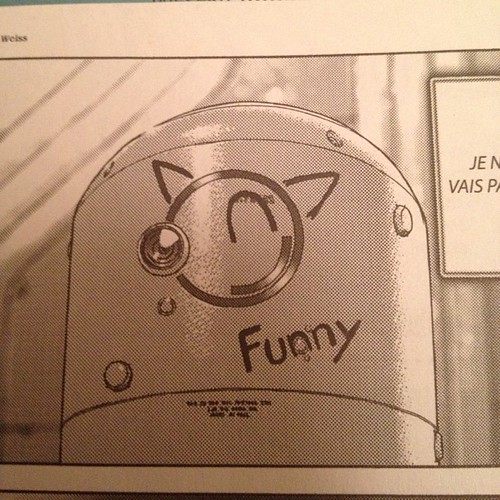

One-eyed robot

Found in Hotel by Boichi, a Japanese manga that I only found in French.

Why do I blog this? I like the way the one-eyed face has been turned into something more human-readable through basic pencil drawings. This may be the equivalent of the "Transmetropolitan" smiley face.

Filming, from the object point of view

This fellow, encountered at Monument Valley, AZ, two weeks ago, took plenty of time to install this little camera on his huge SUV, a somewhat robotic eye... (or, more likely, a proxy to capture souvenirs).

A brief chat with him made me understand that he wanted to get an exhaustive view of the park. This led me to think about objects' viewpoints: the increasing use of this kind of camera (more generally on bikes and snowboards) makes it possible to capture visual elements from a very specific angle. The results can be dramatic or crappy, but it's clearly curious to see the sort of traces produced.

Why do I blog this? This feature actually makes me think about robot perception, or how digitally-enabled artifacts can perceive their environment. Of course, in this case, this is only a car with a camera... but I can't help thinking that this big robotic eye has a curious effect on observers. Perhaps it leads to these "human-robot intersubjectivities", the ‘signs of life’ that robots exhibit and that people perceive and respond to.

Robot fictions: entertainment cultures and engineering research entanglements

Yesterday, in a "secret robot house" in Hatfield, in the suburbs of London, I gave a quick talk about how popular robot fictions influence the design process. The speech was about the propagation of the robot myth in engineering spheres and the influence of certain topics (robot idioms, shapes, behavior and automation)... and how they appear as inevitable tropes in technological research. I tried to uncover what is hidden behind this phenomenon and looked at the complex interactions between entertainment cultures (mostly science fiction) and scientific research. I've uploaded the slides to SlideShare:

Thanks Alex for the invitation!

Different kinds of feedback of what a robot is perceiving

One of the talks at the Robolift11 conference that I found highly inspiring was the one by Pierre-Yves Oudeyer. In his presentation, he addressed different projects he has conducted and several topics that he and his research team focus on. Among the material he showed, he described an interesting experiment about how robot users can be provided with different kinds of feedback about what the robot is perceiving. Results from this study can be found in a paper called "A Robotic Game to Evaluate Interfaces used to Show and Teach Visual Objects to a Robot in Real World Condition". Their investigation concerns the impact of showing what a robot perceives on teaching visual objects (to the robot) and on the usability of human-robot interactions. Their research showed that providing non-expert users with feedback about what the robot is perceiving is needed if one is interested in robust interaction:

"as naive participants seem to have strong wrong assumptions about humanoids visual apparatus, we argue that the design of the interface should not only help users to better understand what the robot perceive but should also drive them to pay attention to the learning examples they are collecting. (...) the interface naturally force them to monitor the quality of the examples they collected."

Why do I blog this? Reading Matt Jones' blog post about sensors the other day made me think about this talk at Robolift. The notion of a "robot-readable world" mentioned in the article is curious, and it's interesting to think about how this perception can be reflected back to human users.

A glimpse at the robolift program

A quick update about the Robolift conference program I've been building over the past months. The event is in two weeks and I'm looking forward to seeing the presentations and debates! We finalized the line-up last week and here's the latest version:

- The Shape of robots to come: What should robots look like? Is it important that robots look like humans or animals? Are there any other possibilities? What alternatives are offered by designers? With Fumiya Iida (Bio-Inspired Robotics Laboratory, ETH Zürich), James Auger (Auger-Loizeau) and Dominique Sciamma (Strate College)

- The social implications of robotics: What does it mean for society to have personal and socially intelligent robots? What are the consequences for people? What are the ethical challenges posed by robots that we can anticipate in the near future? With Cynthia Breazeal (MIT Medialab Personal Robots group), Wendell Wallach (Yale University: Interdisciplinary Center for Bioethics) and Patrizia Marti (Faculty of Humanities, University of Siena).

- Expanding robotics technologies: robot hacking, augmented humans and the military uses of robots. As usual with technologies, robots can be repurposed for other kinds of objectives: programmers « hack » them to test new opportunities, the military deploys them on the battlefield, and robotic technologies are adapted to « augment » the human body. What does this mean? What could be the consequences of such repurposing? With Noel Sharkey (Professor of AI and Robotics, University of Sheffield), Daniela Cerqui (Cultural anthropologist, University of Lausanne) and Daniel Schatzmayr (Robot hacker)

- Human-robot interactions: Robots seem to live either in the long-distant future or in the realm of research labs. This vision is wrong and these speakers will show us how nowadays people interact with them in Europe and in Japan. The session will also address how robots can be useful in developing or understanding our emotions. With Frédéric Kaplan (OZWE and Craft-EPFL), Fujiko Suda (Design ethnographer, Project KOBO) and Alexandra Deschamps-Sonsino (Evangelist, Lirec)

- Artificial intelligence: acquired versus programmed intelligence? Artificial intelligence has long been a recurring objective of engineering. This session will give an overview of the recent progress in this field and the consequences for robotic technologies: How much pre-programming can you put into robotic intelligence? Can robots learn on their own? With Pierre-Yves Oudeyer (INRIA) and Jean-Claude Heudin (Institut International du Multimédia)

- The Future of robotics: This session will feature two talks about how robots might be in the future. From assistive care products to new forms of interactions, we will see tomorrow's technologies, their usages and applications. With Tandy Trower (Hoaloha Robotics) and Jean-Baptiste Labrune (Lab director at Bell Labs Alcatel-Lucent)

- Debate: Errare Humanoid est? Should robots look humanoid? How do/will people interact with them? To conclude the conference, we will get back to the topic of the first session and discuss the importance of humanoid shapes in robotic development: Is it necessary? What are the limits and what opportunities? What could be the alternatives? With Bruno Maisonnier (Founder of Aldebaran Robotics) and Francesco Mondada (Researcher in artificial intelligence and robotics, EPFL).

Thanks to all the speakers who agreed to participate!

What robots are

As a researcher interested in human-technology interfaces, I have always been intrigued by robotics. My work in the field has been limited to several projects here and there: organizing seminars/workshops about it, writing a research grant about human-robot interaction for game design (a project I conducted two years ago in France) and tutoring design students at ENSCI on a project about humanoid robots. If we include networked objects/blogjects in the robot field (which is not obvious for everyone), Julian and I have had our share of work with workshops, talks and a few prototypes at the Near Future Laboratory.

Robolift, the new conference Lift has launched with French partners, was a good opportunity to go deeper into the field of robotics. What follows is a series of thoughts and notes I took while preparing the first editorial discussions.

"I can't define a robot, but I know one when I see one" Joseph Engelberger

The first thing that struck me as fascinating about robots is simply the definition of what "counts" as a robot for the people I've met (entrepreneurs in the field, researchers, designers, users, etc.). For some, an artifact is a robot only if it moves around (on wheels or legs) and has some powerful sensor (such as a camera). For others, it's the shape that matters: anthropomorphic and zoomorphic objects are much more "robotic" than spheres.

Being fascinated by how people define what a robot is and what kinds of technical objects count as robots, I often look for such material on the Web. See for example this collection of definitions and come back here [yes, this is a kind of hypertext reading moment]. The proposed descriptions of what a robot is each focus on different aspects, and it's curious to see the kinds of groups you can form based on them. Using the quotes from this website, I grouped them into clusters that show some characteristics:

What do these clusters tell us anyway? Of course, this view is limited and we cannot generalize from it but there are some interesting elements in there:

- The most important parameters to define a robot revolve around its goal, its mode of operation, its physical behavior, its shape and technical characteristics.

- Technical aspects seem less important than goal and mode of operation

- Defining a robot by stating what it isn't is also relevant

- While there seems to be a consensus on the appearance (humanoid!), goals and modes of operations are pretty diverse.

- Certain aspects are not considered here: non-humanoid robots, software bots, block-shaped, etc.

It's interesting to contrast these elements with results from a research study about the shapes of robot to come (which is a topic we will address at the upcoming Robolift conference).

A few months ago, I ran across this interesting diagram on BotJunkie, extracted from a 2008 study from the Swiss Federal Institute of Technology in Lausanne:

"Researchers surveyed 240 people at a home and living exhibition in Geneva about their feelings on robots in their lives, and came up with some interesting data, including the above graph which shows pretty explicitly that having domestic robots that look like humans (or even “creatures”) is not a good idea, and is liable to make people uncomfortable."

Some of the results I find interesting here:

"Results shows that for the participants in our survey a robot looks like a machine, be it big or small. In spite of the apparent popularity of Japanese robots such as the Sony Aibo, the Furby and Asimo, other categories (creature, human, and animal) gathered only a small percentage. (...) The preferred appearance is therefore very clearly a small machine-like robot"

These results are consistent with another study by Arras and Cerqui, who looked at whether people would prefer a robot with a humanoid appearance. They found that "47% gave a negative answer, 19% said yes, and 35% were undecided".

Why do I blog this? Preparing the upcoming robolift conference and accumulating references for potential projects about human-robot interactions.

"Robot renaissance map"

The Institute For The Future (IFTF) has just released an interesting map [PDF] of signals and forecasts about robotics:

"After decades of hype, false starts, and few successes, smart machines are finally ready for prime time. (...) This map, and the associated series of written perspectives, are tools to help navigate the coming changes. As we scanned across ten application domains, seven big forecasts emerged. In the process, we also identified three key areas of impact where the robot renaissance will change our lives over the next decade.

In each domain we focus on three levels of impacts: (1) Robots helping humans understand ourselves (2) Robots augment human abilities (3) Robots automate human tasks"

Why do I blog this? Both because I am working on a project about robotics and due to my interest in how technologies fail and re-appear on a regular basis. I am not sure about the term "renaissance" and do not necessarily agree with some of the trends but there are some interesting aspects in this anyway. Above all, what I am interested in here is:

- The variation in terms of shape/forms and what is considered here as a "robot",

- The assumptions made about human needs and desires,

- The mix between engineering projects, quotes from sci-fi movies and pictures,

- The rhetorical tricks (present tense, "from XXX to XXX", "Rise", "Every machine", "self-manage").

Bruce Sterling on robots in ACM interactions

Some excerpts from an interesting 2005 interview with Bruce Sterling about robots in ACM interactions:

"AM: What do you think of as the most successful or surprising innovation in robotics in the past? BS: Well, robots are always meant to be “surprising,” because they are basically theater or carnival shows. A “successful” robot, that is to say, a commercially and industrially successful one, wouldn’t bother to look or act like a walking, talking human being; it would basically be an assembly arm spraying paint, because that’s how you get the highest return on investment out of any industrial investment: make it efficient, get rid of all the stuff that isn’t necessary. But of course it’s the unnecessary, sentimentalized, humanistic aspects of robots that make robots dramatically appealing to us. There’s a catch-22 here. You can go down to an aging Toyota plant and watch those robot arms spray paint, but it’ll strike you as rote work that is dull, dirty, and dangerous—you’re not likely to conclude, “Whoopee, look at that robot innovation go!” When it’s successful, it doesn’t feel very robotic, because it’s just not dramatic. (...) AM: How do you think robots will be defined in the future? BS: I’d be guessing that redefining human beings will always trump redefining robots. Robots are just our shadow, our funhouse-mirror reflection. If there were such a thing as robots with real intelligence, will, and autonomy, they probably wouldn’t want to mimic human beings or engage with our own quirky obsessions. We wouldn’t have a lot in common with them: we’re organic, they’re not; we’re mortal, they’re not; we eat, they don’t; we have entire sets of metabolic motives, desires, and passions that really are of very little relevance to anything made of machinery.

AM: What’s in the future of robotics that is likely very different from most people’s expectations? BS: Robots won’t ever really work. They’re a phantasm, like time travel or maybe phlogiston. On the other hand, if you really work hard on phlogiston, you might stumble over something really cool and serendipitous, like heat engines and internal combustion. Robots are just plain interesting. When scientists get emotionally engaged, they can do good work. What the creative mind needs most isn’t a cozy sinecure but something to get enthusiastic about."

Why do I blog this? Working on the program of the upcoming Robolift conference in France next March led me to accumulate insights like these. They might also be useful for my design course and for research projects about human-robot interactions.

Lift seminar@imaginove about robots/networked objects

A quick update on the Lift@home front: we're going to have a Lift seminar with Imaginove on September 29th in Lyon. We'll talk about how networked objects and robotics can offer an interesting playground for digital entertainment. The event will be in French and we'll have two speakers. The first is Etienne Mineur from Editions Volumiques, a publishing house focusing on the paper book as a new computer platform, as well as a research lab on books, computational paper, reading, playing and their relation to new technologies. The second speaker, Pierre Bureau from Arimaz, will discuss how robots and networked objects can be connected to virtual environments to create innovative gameplay. I'll give an introduction to this field and moderate the session.

(A "beggar robot" encountered last week in Trento, created by Sašo Sedlaček)

Very related to this, we are now officially working on a new conference about robotics. The "Robolift" conference will take place on 23-25 March in Lyon, during the first edition of inno-robo, the European trade show dedicated to robotic technologies organized by the French Robotics Association Syrobo.

Robot memory in Blade Runner

In Blade Runner, Roy Batty tells Deckard about the things he has seen in his life and how all those memories will vanish. About to die, he gives this memorable final speech:

"I've seen things you people wouldn't believe. Attack ships on fire off the shoulder of Orion. I've watched c-beams glitter in the dark near the Tannhäuser Gate. All those ... moments will be lost in time, like tears...in rain. Time to die."

...a replicant/robot who loved his life, telling what it meant.

Why do I blog this? A curious quote to be used at some point.