Finally had some time to watch Moon by Duncan Jones yesterday evening. Certainly a good sci-fi movie, with various implications to ruminate on. Slow-paced and with nice music. I found the props quite curious and not necessarily super showy.

One of the most intriguing features of the movie is certainly GERTY, a robot voiced by Kevin Spacey. Based on the Cog project, it exists both as a physical prop for static scenes and as CG for when it moves around.

A convincing character, GERTY has limited AI, as the director discussed in Popular Mechanics:

"There is limited AI. GERTY is not wholly sentient. He really is a system as opposed to a being in his own right--that was one of the things I wanted to get across. The audience, and the different Sams, bring their own baggage to GERTY. They're the ones who anthropomorphize him and basically make him out to be more than he is. GERTY's system is very simple: He's there to look after Sam and make sure that he survives for 3 years. That's it. When you start watching the film, you're already making unwarranted assumptions about GERTY because of the HAL 9000 references and Kevin Spacey's slightly menacing voice. That's what the Sams do as well. The company itself, Lunar Industries, is nefarious. GERTY is not. He's doing his job. He has conversations with the company but he doesn't tell Sam because he's programmed not to. It's as simple as that. (...) The idea was to create a machine that was incorporating more than one type of sense data. So it had cameras for eyes, tactile fingertips and a moving robotic arm. It had an audio capture system. It was basically taking all of these various forms of data, giving it the eyes to see something and have the arm reach out and touch it in the right place"

See also some interesting details about him in this interview on fxguide.

Perhaps the most interesting aspect of GERTY (IMHO) is its smiley-face display, used to express its feelings. This little screen conveys the robot's emotions in a very basic way through different permutations. Here again, it's worth reading about the director's intentions:

"I use a lot of social networking sites. I’m on Twitter all the time. I use all these various forms of networking, including the text version of Skype. I tend to use smiley faces to make sure people know that I’m joking. That’s my own reason for using it on Gerty. I also like the idea that Gerty’s designed by this company which doesn’t have much respect for Sam and treats him in a patronizing way. So they use smiley faces to communicate with him."

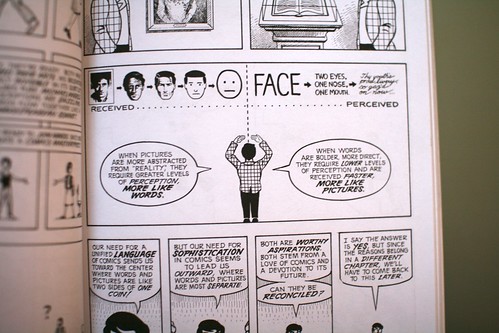

I really liked the way the smileys are used: a sort of simplistic (and, as he mentioned, patronizing) representation of an assistant, very much reminiscent of Clippy. This use of smileys reminded me of the Uncanny Valley and of a passage from Scott McCloud's Understanding Comics: The Invisible Art.

For McCloud, a smiley face is the ultimate abstraction because it could potentially represent anyone. As he explained, "The more cartoony a face is…the more people it could be said to describe". Besides, it's really curious from an anthropomorphic point of view, because the robot's design combines two features: the smiley face (with eyes and a mouth) and a camera. It's quite funny because in lots of sci-fi movies and comics, the camera looks like an eye and is sometimes perceived by people as having the same function. In Moon, the combination of the camera and the smiley face makes GERTY very quirky.

Why do I blog this? I'm trying to make some connections between this movie and some interesting elements of robot design.

It seems that people wanted to combine toys and robots for quite a long time, as attested by this

(pictures taken from

Why do I blog this? Because these robots look amazing for different reasons: (1) they're not that zoomorphic (I don't believe the added value of a robot lies in its isomorphism with an animal), and (2) the robot's behavior is based on an artificial intelligence model that I found more interesting than those of other devices.