The Twitter thread that I mentioned the other day about AI as a Lovecraftian creature drew comments from various people on the blue bird social media. One of them pointed to this other kind of representation for Artificial Intelligence algos:

Created a month after the release of ChatGPT, this octopus-like creature is a Shoggoth (hence the term "Shoggoth memes" for variations on this representation), which obviously comes from H.P. Lovecraft's lore, commonly known as the Cthulhu Mythos.

Reacting to my post on Mastodon, Justin showed me this article in the New York Times by Kevin Roose, which discusses the use of this entity as a recurring metaphor in essays and message board posts about AI risk and safety. Some excerpts from Roose's piece:

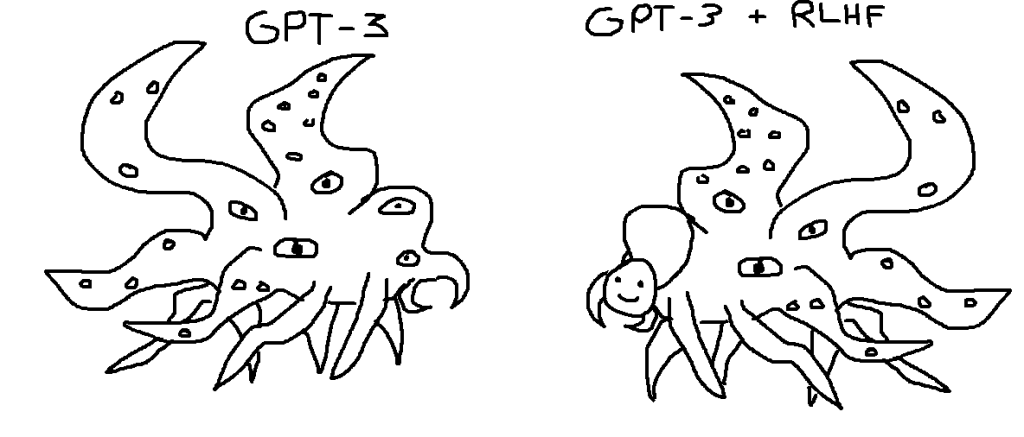

In a nutshell, the joke was that in order to prevent A.I. language models from behaving in scary and dangerous ways, A.I. companies have had to train them to act polite and harmless. One popular way to do this is called “reinforcement learning from human feedback,” or R.L.H.F., a process that involves asking humans to score chatbot responses and feeding those scores back into the A.I. model. Most A.I. researchers agree that models trained using R.L.H.F. are better behaved than models without it. But some argue that fine-tuning a language model this way doesn’t actually make the underlying model less weird and inscrutable. In their view, it’s just a flimsy, friendly mask that obscures the mysterious beast underneath.

@TetraspaceWest, the meme’s creator, told me in a Twitter message that the Shoggoth “represents something that thinks in a way that humans don’t understand and that’s totally different from the way that humans think.” Comparing an A.I. language model to a Shoggoth, @TetraspaceWest said, wasn’t necessarily implying that it was evil or sentient, just that its true nature might be unknowable. “I was also thinking about how Lovecraft’s most powerful entities are dangerous — not because they don’t like humans, but because they’re indifferent and their priorities are totally alien to us and don’t involve humans, which is what I think will be true about possible future powerful A.I.”

Eventually, A.I. enthusiasts extended the metaphor. In February, the Twitter user @anthrupad created a version of a Shoggoth that had, in addition to a smiley-face labeled “R.L.H.F.,” a more humanlike face labeled “supervised fine-tuning.”

Why do I blog this? As Kevin Roose discusses at the end of his piece, the interesting thing here is that developers, scientists and entrepreneurs working with this technology seem to be "somewhat mystified by their own creations". Not knowing precisely how these systems work, they translate their anxiety into this kind of metaphor, with its peculiar connotations of weirdness and oddity. The NYT piece is also relevant to a different aspect that is dear to my heart: the vocabulary employed by practitioners, who say things like "glimpsing the Shoggoth" to refer to people taking a peek at such entities.