Blom, J. and Monk, A.F. (2003): Theory of Personalization of Appearance: Why Users Personalize Their PCs and Mobile Phones, Human-Computer Interaction, Vol. 18, No. 3, Pages 193-228.

Abstract: Three linked qualitative studies were performed to investigate why people choose to personalize the appearance of their PCs and mobile phones and what effects personalization has on their subsequent perception of those devices. The 1st study involved 35 frequent Internet users in a 2-stage procedure. In the 1st phase they were taught to personalize a commercial Web portal and then a recommendation system, both of which they used in the subsequent few days. In the 2nd phase they were allocated to 1 of 7 discussion groups to talk about their experiences with these 2 applications. Transcripts of the discussion groups were coded using grounded theory analysis techniques to derive a theory of personalization of appearance that identifies (a) user-dependent, system-dependent, and contextual dispositions; and (b) cognitive, social, and emotional effects. The 2nd study concentrated on mobile phones and a different user group. Three groups of Finnish high school students discussed the personalization of their mobile phones. Transcripts of these discussions were coded using the categories derived from the 1st study and some small refinements were made to the theory in the light of what was said. Some additional categories were added; otherwise, the theory was supported. In addition, 3 independent coders, naive to the theory, analyzed the transcripts of 1 discussion group each. A high degree of agreement with the investigators' coding was demonstrated. In the 3rd study, a heterogeneous sample of 8 people who used the Internet for leisure purposes were visited in their homes. The degree to which they had personalized their PCs was found to be well predicted by the dispositions in the theory. Design implications of the theory are discussed.

GRRR I cannot get the pdf (registration required)

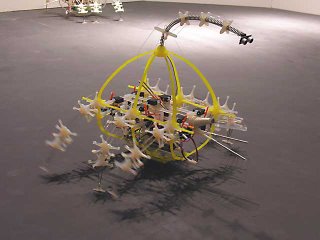

(picture via
