Switching this TV from 2D to 3D needs some explanation, which someone provided with these post-it notes. (Seen at CERN last week.)

Fingerprints on touch interface as interaction pattern

An interesting project by Design Language:

"Because the primary input method of the iPad is a single piece of multitouch glass, developers have incredible flexibility to design unique user interfaces. It’s hard to appreciate the variety of UIs though, since turning the screen off removes virtually all evidence of them. To spotlight these differences, I looked at the only fragments that remain from using an app: fingerprints."

Why do I blog this? It's interesting to see how this quick hack makes it very easy to observe usage patterns on touch interfaces. The idea here is to benefit from physical traces to describe the accumulation of interaction over time. It would be fun to add a temporal dimension to this.

Saskia Sassen: Talking back to your intelligent city

Much of what is put under the “smart city” umbrella has actually been around for a decade or more. Bit by bit (or byte by byte), we’ve been retrofitting various city systems and networks with devices that count, measure, record, and connect. (...) The current euphoria, however, centers around a more costly, difficult-to-implement vision. Rather than retrofitting old cities, the buzz today is about building entire smart cities from scratch in a matter of a few years (hence the alternative name “instant city”)

(...)

The first phase of intelligent cities is exciting. (...) The act of installing, experimenting, testing, or discovering—all of this can generate innovations, both practical and those that exist mainly in the minds of weekend scientists. (...) But the ensuing phase is what worries me; it is charged with negative potentials. From experimentation, discovery, and open-source urbanism, we could slide into a managed space where “sensored” becomes “censored.”

(...)

The challenge for intelligent cities is to urbanize the technologies they deploy, to make them responsive and available to the people whose lives they affect. Today, the tendency is to make them invisible, hiding them beneath platforms or behind walls—hence putting them in command rather than in dialogue with users. One effect will be to reduce the possibility that intelligent cities can promote open-source urbanism, and that is a pity. It will cut their lives short. They will become obsolete sooner. Urbanizing these intelligent cities would help them live longer because they would be open systems, subject to ongoing changes and innovations. After all, that ability to adapt is how our good old cities have outlived the rise and fall of kingdoms, republics, and corporations.

Why do I blog this? Some interesting elements, to be considered after the series of workshops we had at Lift11 this week (about smart cities and the use of urban data).

We just had a great Lift11, more about it later.

Henk Hofstra's Blue Road as a city waterway

Last April, Henk Hofstra created an "urban river" in Drachten, Holland. The Blue Road installation is an example of what mind-blowing urban public art can be.

Featuring 1000 metres of road painted blue and the phrase "Water is Life" written in eight-metre-high letters across it, the Blue Road is reminiscent of the waterway that used to be where the road is now. It's a memorial to nature, but it's also just plain awe-inspiring. There's even a few cool tidbits along the road, like a sinking car.

Sifteo: networked cubes as a game system

Sifteo cubes are a true game system. Each cube is equipped with a full color display, a set of sensing technologies, and wireless communication. During gameplay, the cubes communicate with a nearby computer via the USB wireless link. Manage your games and buy new ones using the Sifteo application installed on your computer. Each cube packs a full color LCD, a 3D motion sensor, wireless communication, a peppy CPU and more. Your computer connects to the cubes via the included Sifteo USB wireless link.

Why do I blog this? This seems to be an intriguing "networked object" platform. I like the idea of creating applications/gameplay based on the interactions between several cubes.

An impressive series of Virtual reality helmet drawings found in the US patents:

Virtual reality interactivity system and method by Justin R. Romo (1991):

Helmet for providing virtual reality environments by Richard Holmes (1993)

Optical system for virtual reality helmet by Ken Hunter (1994):

Virtual reality visual display helmet by Bruce R. Bassett et al. (1996):

Helmet mounting device and system by Andrew M. Ogden (1996):

Virtual reality exercise machine and computer controlled video system by Robert Jarvik (1996)

Virtual reality system with an optical position-sensing facility by Ulrich Sieben (1998):

Multiple viewer headset display apparatus and method with second person icon by Michael DeLuca et al (2002):

Visual displaying device for virtual reality with a built-in biofeedback sensor by Sun-II Kim et al (2002):

Virtual reality helmet by Travis Tadysak (2002):

System for combining virtual and real-time environments by Edward N. Bachelder et al (1997):

Why do I blog this? Working on a potential chapter in my book concerning recurring failures of digital technologies led me to investigate patents about VR. As usual when I dig into Google Patents, I am fascinated by the graphics (drawing styles) and how much they reveal about design preconceptions. There's a lot to draw from these... especially about what the "inventors" (this term may look anachronistic but it's the one employed in the patent system) bring up in the graphics. Moreover, it's also great to see the different shapes that have been proposed (of course the patents are not just about shapes and design). These elements put the current 3D glasses discussion in perspective.

Proximeter: an ambient social navigation instrument (H. Holtzman + J. Kestner)

"Would you know if a dear, but seldom seen, friend happened to be on the same train as you? The proximeter is both an agent that tracks the past and future proximity of one’s social cloud, and an instrument that charts this in an ambient display. By reading existing calendar and social network feeds of others, and abstracting these into a glanceable pattern of paths, we hope to nurture within users a social proprioception and nudge them toward more face-to-face interactions when opportunities arise."

Why do I blog this? Although I am not sure about the use case (and how it may lead to some new quirky social behavior), I find it interesting, FOR ONCE, to see a location-awareness device that is not focused on the present/real-time. The idea here is rather to give some information about the near future... and I would find it interesting to have this sort of representation only as an ambient display at home: no mobile version, only some simple insights before heading elsewhere.

ThingM Project Feature: Books with Personality

The intent of this project was to create animism in an object, with the use of programmable BlinkM® LEDs. We were interested in books because, as a set of objects, they still had a degree of individuality which we wanted to bring forward. By accentuating the character that the titles already exuded, we were able to develop each personality in unique ways, furthering the books from their common mass–produced ancestry. This experiment came close to becoming a psychoanalysis of an object–exploring themes of ego, vulnerability, intellect, and self-awareness.

Why do I blog this? Curious project by Jisu Choi and Matt Kizu (from Art Center) about books and how they can be enriched with "individuality" features through technology.

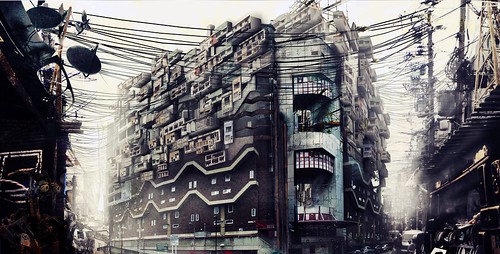

Brixton High Street: urban design for robots

An interesting project by Kibwe X-Kalibre Tavares:

"These are a collection of images of what Brixton could be like if it were to develop as a disregarded area inhabited by London's new robot workforce. Built and design to do all the task humans no longer want to do. The population of brixton has rocketed and unplanned cheap quick additions have been made to the skyline."

Why do I blog this? Designing an urban environment (fictional or not) to accommodate robots seems to be a rather interesting brief. Surely some good design fictions can be built from there to reflect the possibilities of the future(s). The project blog is full of interesting material.

Mechanical faces in Sevilla (with a different number of eyes)

As soon as you see this kind of gas counter in Spain, you start noticing that its design is pretty similar to a face:

A face like this:

And then you see a similar device with 3 counters, which you recognize as a "3-eyed face" (see also the Native American version):

Later on, you encounter one-eyed faces such as:

Or the wounded version:

Why do I blog this? These observations are close to a phenomenon we described with Fabien in Sliding Friction: Mistaking an interface or a device for a face corresponds to a psychological phenomenon called “pareidolia”: a type of illusion or misperception involving a vague or obscure stimulus perceived as something clear and distinct.

Recently, I also dealt with the link between this and design... showing how Gerty, the robot in the sci-fi movie Moon, had a pretty basic face cued by a display that showed a smiley. It's fascinating to see how very minimal features can trigger (1) a face-like appearance, (2) rough forms of emotions that individuals can project on the device.

Smart Cities: how to move from here to there? | Lift11 workshop

Workshop theme: Ubiquitous computing: Augmented Reality, location-based services, internet of things, urban screens, networked objects and robots

Over the past few years, "Smart Cities" have become a prominent topic in tech conferences and press. Apart from the use of this term for marketing purposes, it corresponds to a mix of trends about urbanisation: the use of Information and Communication Technologies to create intelligent responsiveness or optimization at the city level, the coordination of different systems to achieve significant efficiencies and sustainability benefits, or the fact that cities provide "read/write" functionality for their citizens.

The common approach to designing Smart Cities is to start by upgrading the infrastructure first and consider the implications afterward. We will take a different path in our workshop and focus on situations that involve already existing and installed technologies (from mobile phones to user-generated content and social networking sites). To follow up on past Lift talks (Nathan Eagle at Lift07, Adam Greenfield at Lift08, Dan Hill, Anne Galloway and Carlo Ratti at Lift09, Fabien Girardin at Lift France 09) and workshops (about urban futures at Lift07 and Lift09), the goal of this session will be to step back and collectively characterize what a Smart City could be. This workshop targets researchers, designers and technology experts interested in developing alternative approaches to technology-driven visions of urban environments.

Co-organizers: Nicolas Nova, Vlad Trifa and Fabien Girardin.

A workshop I'll be organizing at Lift with Vlad and Fabien.

Temporary mailbox in a phone booth

A curious cardboard mailbox inserted in a phone booth in Lyon, France. It seemed to be for a foundation that helps homeless people (it subsequently plays on the idea that phone booths can become communication nodes for them).

What happens in an interaction design studio

There's an interesting short article by Bill Gaver in the latest issue of ACM interactions. Beyond the focus of the research, I was interested in the description of the approach and the vocabulary employed. Very relevant to see how they define what "design research" can be.

He starts off by stating that their place is "a studio, not a lab": it's an interdisciplinary team (product and interaction design, sociology and HCI) and they "pursue our research as designers". Speaking about the "design-led" approach, here's the description of how they conduct projects:

"Our designs respond to what we find by picking up on relevant topics and issues, but in a way that involves openness, play, and ambiguity, to allow people to make their own meanings around them. (...) An essential part of our process is to let people try the things we make in their everyday environments over long periods of time—our longest trial so far is over a year—so we can see how they use them, what they find valuable, and what works and what doesn't. (...) Over the course of a project, we tend to concentrate on crafting compelling designs, without distracting ourselves by thinking about the high-level research issues to which they might speak. It's only once our designs are done and field trials are well under way that we start to reflect on what we have learned. Focusing on the particular in this way helps us ensure that our designs work in the specific situations for which they're developed, while remaining confident that in the long run they will produce surprising new insights about technologies, styles of interaction, and the people and settings with whom we work—if we've done a good job in choosing those situations."

Why do I blog this? Collecting material for a project about what design research is. Although brief, the article is interesting as it describes studio life in a very casual way (I'd be curious to read the equivalent from a hardcore science research lab btw). The description Gaver makes is also relevant as it surfaces important aspects of studio life (prerequisites to design maybe): interdisciplinarity at first (and then "most of the studio members have picked up other skills along the way"), fluidity of roles, the fact that members contribute to projects according to their interests and abilities.

What robots are

As a researcher interested in human-technology interfaces, I have always been intrigued by robotics. My work in the field has been limited to several projects here and there: organizing seminars and workshops about it, writing a research grant about human-robot interaction for game design (a project I conducted two years ago in France) and tutoring design students at ENSCI on a project about humanoid robots. If we include networked objects/blogjects in the robot field (which is not obvious for everyone), Julian and I had our share of work with workshops, talks and a few prototypes at the Near Future Laboratory.

Robolift, the new conference Lift has launched with French partners, was a good opportunity to go deeper in the field of robotics. What follows is a series of thoughts and notes I've taken when preparing the first editorial discussions.

"I can't define a robot, but I know one when I see one" Joseph Engelberger

The first thing that struck me as fascinating when it comes to robots is simply the definition of what "counts" as a robot for the people I've met (entrepreneurs in the field, researchers, designers, users, etc.). For some, an artifact is a robot only if it moves around (on wheels or feet) and if it has some powerful sensor (such as a camera). For others, it's the shape that matters: anthropomorphic and zoomorphic objects are much more "robotic" than spheres.

Being fascinated by how people define what a robot is and what kinds of technical objects count as robots, I often look for such material on the World Wide Web. See for example this collection of definitions and get back here [yes this is a kind of hypertext reading moment]. The proposed descriptions of what a robot is all focus on different aspects and it's curious to see the kinds of groups you can form based on them. Based on the quotes from this website, I grouped them in clusters which show some characteristics:

What do these clusters tell us anyway? Of course, this view is limited and we cannot generalize from it but there are some interesting elements in there:

- The most important parameters to define a robot revolve around its goal, its mode of operation, its physical behavior, its shape and technical characteristics.

- Technical aspects seem less important than goal and mode of operation.

- Defining a robot by stating what it isn't is also relevant.

- While there seems to be a consensus on the appearance (humanoid!), goals and modes of operation are pretty diverse.

- Certain aspects are not considered here: non-humanoid robots, software bots, block-shaped, etc.

It's interesting to contrast these elements with results from a research study about the shapes of robots to come (which is a topic we will address at the upcoming Robolift conference).

A few months ago, I ran across this interesting diagram on BotJunkie, extracted from a 2008 study from the Swiss Federal Institute of Technology in Lausanne:

"Researchers surveyed 240 people at a home and living exhibition in Geneva about their feelings on robots in their lives, and came up with some interesting data, including the above graph which shows pretty explicitly that having domestic robots that look like humans (or even “creatures”) is not a good idea, and is liable to make people uncomfortable."

Some of the results I find interesting here:

"Results shows that for the participants in our survey a robot looks like a machine, be it big or small. In spite of the apparent popularity of Japanese robots such as the Sony Aibo, the Furby and Asimo, other categories (creature, human, and animal) gathered only a small percentage. (...) The preferred appearance is therefore very clearly a small machine-like robot"

These results are consistent with another study by Arras and Cerqui. These authors looked at whether people would prefer a robot with a humanoid appearance. They found that "47% gave a negative answer, 19% said yes, and 35% were undecided".

Why do I blog this? Preparing the upcoming Robolift conference and accumulating references for potential projects about human-robot interactions.

Steel and discarded electronics collection

As usual, people who collect steel pieces, metal parts and discarded electronics never cease to interest me. This picture depicts a guy I spotted last week in Sevilla, Spain. As the quantity of manufactured artifacts increases, the fact that some folks toss them on the street makes this kind of re-collection pertinent for certain people... who will re-use them and sell them to other parties.

User's involvement in location obfuscation with LBS

Exploring End User Preferences for Location Obfuscation, Location-Based Services, and the Value of Location is an interesting paper written by Bernheim Brush, John Krumm, and James Scott from Microsoft Research. The paper presents the results from a field study about people’s concerns about the collection and sharing of long-term location traces. To do so, they interviewed 32 people from 12 households as part of a 2-month GPS logging study. The researchers also investigated how the same people react to location "obfuscation methods":

- "Deleting: Delete data near your home(s): Using a non-regular polygon all data within a certain distance of your home and other specific locations you select. This would help prevent someone from discovering where you live.

- Randomizing: Randomly move each of your GPS points by a limited amount. The conditions below ask about progressively more randomization. This would make it harder for someone else to determine your exact location.

- Discretizing: Instead of giving your exact location, give only a square that contains your location. Your exact location could not be determined, only that you were somewhere in the square. This would make it difficult for someone to determine your exact location.

- Subsampling: Delete some of your data so there is gap in time between the points. Anyone who can see your data would only know about your location at certain times.

- Mixing: Instead of giving your exact location, give an area that includes the locations of other people. This means your location would be confused with some number of other people."
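To get a feel for what these five methods do to a GPS trace, here is a minimal Python sketch. It is my own illustration, not the code from the paper: the `Point` type, the function names and all parameters are hypothetical, a simple circle stands in for the paper's "non-regular polygon", and the mixing function only draws a box around the k-1 nearest other people, which is a crude stand-in for a proper k-anonymity scheme.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    t: float    # timestamp in seconds
    lat: float  # degrees
    lon: float  # degrees


M_PER_DEG = 111_320.0  # rough number of metres in one degree of latitude


def distance_m(a_lat, a_lon, b_lat, b_lon):
    """Equirectangular approximation, good enough over short distances."""
    mean_lat = math.radians((a_lat + b_lat) / 2)
    dx = (a_lon - b_lon) * M_PER_DEG * math.cos(mean_lat)
    dy = (a_lat - b_lat) * M_PER_DEG
    return math.hypot(dx, dy)


def delete_near(points, home, radius_m):
    """Deleting: drop points within radius_m of home (circle, not a polygon)."""
    return [p for p in points
            if distance_m(p.lat, p.lon, home[0], home[1]) > radius_m]


def randomize(points, max_shift_m, rng=random):
    """Randomizing: shift each point by up to max_shift_m along each axis."""
    out = []
    for p in points:
        dlat = rng.uniform(-max_shift_m, max_shift_m) / M_PER_DEG
        dlon = (rng.uniform(-max_shift_m, max_shift_m)
                / (M_PER_DEG * math.cos(math.radians(p.lat))))
        out.append(Point(p.t, p.lat + dlat, p.lon + dlon))
    return out


def discretize(points, cell_deg):
    """Discretizing: report only the south-west corner of the grid cell."""
    return [Point(p.t,
                  math.floor(p.lat / cell_deg) * cell_deg,
                  math.floor(p.lon / cell_deg) * cell_deg)
            for p in points]


def subsample(points, min_gap_s):
    """Subsampling: keep a point only if min_gap_s passed since the last kept one."""
    kept = []
    for p in sorted(points, key=lambda q: q.t):
        if not kept or p.t - kept[-1].t >= min_gap_s:
            kept.append(p)
    return kept


def mix(me, others, k):
    """Mixing: box (south, west, north, east) covering me and my k-1 nearest neighbours."""
    nearest = sorted(
        others, key=lambda o: distance_m(me.lat, me.lon, o.lat, o.lon))[:k - 1]
    group = [me] + nearest
    lats = [p.lat for p in group]
    lons = [p.lon for p in group]
    return (min(lats), min(lons), max(lats), max(lons))
```

Each function takes a trace and returns a degraded copy, so an interface could let people chain them or tune a single parameter (radius, shift, cell size, time gap, k) for each one.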

Results indicate that:

"Participants preferred different location obfuscation strategies: Mixing data to provide k-anonymity (15/32), Deleting data near the home (8/32), and Randomizing (7/32). However, their explanations of their choices were consistent with their personal privacy concerns (protecting their home location, obscuring their identity, and not having their precise location/schedule known). When deciding with whom to share with, many participants (20/32) always shared with the same recipient (e.g. public anonymous or academic/corporate) if they shared at all. However, participants showed a lack of awareness of the privacy interrelationships in their location traces, often differing within a household as to whether to share and at what level."

Why do I blog this? Gathering material about location-based services, digital traces and privacy for a potential research project proposal. What is interesting in this study is simply that the findings show that end-user involvement in obfuscation of their own location data can be an interesting avenue. From a research point of view, it would be curious to investigate and design various sorts of interfaces to allow this to happen in original/relevant/curious ways.

Primer's explanatory diagram and timelines as a design tool

The blogpost Julian wrote yesterday about Primer reminded me of this curious diagram that David Calvo sent me a few weeks ago. The diagram is a tentative description of what happens in the movie.

Since the movie is about two engineers who accidentally created a weird apparatus that allows an object or person to travel backward in time, it becomes quite difficult to understand where is who and who is where. The diagram makes it slightly more apparent.

Why do I blog this? Rainy Sunday morning thought. Apart from my own fascination for weird timelines, I find this kind of artifact interesting as a design/thinking tool... to think about parallel possibilities, about how an artifact or a situation can have different branches. Taking time as a starting point to think about alternative presents and speculative futures is quite common in design, as shown by James Auger's interesting matrix.

Human reality resides in machines

(Traces of human activity revealed on a building encountered in Malaga, Spain)

An interesting excerpt from Gilbert Simondon's On The Mode Of Existence of Technical Objects:

"He is among the machines that work with him. The presence of man in regard to machines is a perpetual invention. Human reality resides in machines as human actions fixed and crystalized in functioning structures. These structures need to be maintained in the course of their functioning, and their maximum perfection coincides with their maximum openness, that is, with their greatest possible freedom in functioning. Modern calculating machines are not pure automata; they are technical beings which, over and above their automatic adding ability (or decision-making ability, which depends on the working of elementary switches) possess a very great range of circuit-commutations which make it possible to program the working of the machine by limiting its margin of indetermination. It is because of this primitive margin of indetermination that the same machine is able to work out cubic roots or to translate from one language to another a simple text composed of a small number of words and turns of phrase."

Why do I blog this? This reminds me of this quote by Howard Becker: "It makes more sense to see artifacts as the frozen remains of collective action, brought to life whenever someone uses them" that Basile pointed me to a few months ago (which is very close to Madeleine Akrich's notion of script described here).

A few days in Spain + Augmented Reality and best wishes for 2011

Currently in Spain, spending a few days of vacation until next weekend. A good opportunity to work on a book project (in French, about recurring failures of technologies), read books, take some pictures and test applications such as Word Lens.

In general, I am quite critical of Augmented Reality but in this case, I find the idea quite intriguing. This app is meant to "translate printed words from one language to another with your built-in video camera in real time". Of course, the system does not work all the time and you often get weird translations and tech glitches (especially when pointing at a large quantity of text in a newspaper)... but the use case itself is quite interesting and I am curious to see how such a concept can evolve over time. It's basically relevant to see how an old technology such as OCR has been combined with the ever-increasing quality of cell-phone lenses. Lots of problems to be solved but interesting challenges ahead.

Best wishes for 2011!